10 Inference for Regression

In this chapter, we revisit the regression model studied in Chapters 5 and 6. We do it by taking into account the inferential statistics methods introduced in Chapters 8 and 9. We will show that when applying the linear regression methods introduced earlier on sample data, we can gain insight into the relationships between the response and explanatory variables of an entire population.

Needed packages

If needed, read Section 1.3 for information on how to install and load R packages.

Recall that loading the tidyverse package loads many packages that we have encountered earlier. For details refer to Section 4.4. The packages moderndive and infer contain functions and data frames that will be used in this chapter.

10.1 The simple linear regression model

10.1.1 UN member states revisited

We briefly review the example of UN member states covered in Section 5.1.

Data on the current UN member states, as of 2024, can be found in the un_member_states_2024 data frame included in the moderndive package.

As we did in Section 5.1, we save these data as a new data frame called UN_data_ch10, select() the required variables, and include rows without missing data using na.omit():

UN_data_ch10 <- un_member_states_2024 |>

select(country,

life_exp = life_expectancy_2022,

fert_rate = fertility_rate_2022)|>

na.omit()

UN_data_ch10| column | n | group | type | min | Q1 | mean | median | Q3 | max | sd |

|---|---|---|---|---|---|---|---|---|---|---|

| life_exp | 183 | numeric | 53.6 | 69.4 | 73.66 | 75.2 | 78.3 | 86.4 | 6.84 | |

| fert_rate | 183 | numeric | 0.9 | 1.6 | 2.48 | 2.0 | 3.2 | 6.6 | 1.15 |

Above we show the summary of the two numerical variables.

Observe that there are 183 observations without missing values.

Using simple linear regression between the response variable fertility rate (fert_rate) or \(y\), and the regressor life expectancy (life_exp) or \(x\), the regression line is:

\[ \widehat{y}_i = b_0 + b_1 \cdot x_i. \]

We have presented this equation in Section 5.1, but we now add the subscript \(i\) to represent the \(i\)th observation or country in the UN dataset, and we let \(i = 1\), \(\dots\), \(n\) with \(n = 183\) for this UN data. The value \(x_i\) represents the life expectancy value for the \(i\)th member state, and \(\widehat{y}_i\) is the fitted fertility rate for the \(i\)th member state. The fitted fertility rate is the result of the regression line and is typically different than the observed response \(y_i\). The residual is given as the difference \(y_i - \widehat{y}_i\).

As discussed in Subsection 5.3.2, the intercept (\(b_0\)) and slope (\(b_1\)) are the regression coefficients, such that the regression line is the “best-fitting” line based on the least-squares criterion. In other words, the fitted values \(\widehat{y}\) calculated using the least-squares coefficients (\(b_0\) and \(b_1\)) minimize the sum of the squared residuals:

\[ \sum_{i=1}^{n}(y_i - \widehat{y}_i)^2 \]

As we did in Section 5.1, we fit the linear regression model.

By “fit” we mean to calculate the regression coefficients, \(b_0\) and \(b_1\), that minimize the sum of squared residuals.

To do this in R, we use the lm() function with the formula fert_rate ~ life_exp and save the solution in simple_model:

| Coefficients | Values | |

|---|---|---|

| (Intercept) | b0 | 12.613 |

| life_exp | b1 | -0.137 |

The regression line is \(\widehat{y}_i = b_0 + b_1 \cdot x_i = 12.613 - 0.137 \cdot x_i\), where \(x_i\) is the life expectancy for the \(i\)th country and \(\widehat{y}_i\) is the corresponding fitted fertility rate. The \(b_0\) coefficient is the intercept and has a meaning only if the range of values of the regressor, \(x_i\), includes zero. Since life expectancy is always a positive value, we do not provide any interpretation to the intercept in this example. The \(b_1\) coefficient is the slope of the regression line; for any country, if the life expectancy were to increase by about one year, we would expect an associated reduction of the fertility rate by about 0.137 units.

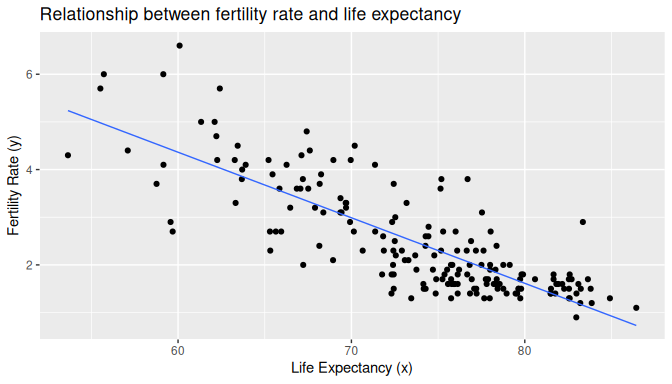

We visualize the relationship of the data observed in Figure 10.1 by plotting the scatterplot of fertility rate against life expectancy for all the UN member states with complete data. We also include the regression line using the least-squares criterion:

ggplot(UN_data_ch10, aes(x = life_exp, y = fert_rate)) +

geom_point() +

labs(x = "Life Expectancy (x)",

y = "Fertility Rate (y)",

title = "Relationship between fertility rate and life expectancy") +

geom_smooth(method = "lm", se = FALSE, linewidth = 0.5)

FIGURE 10.1: Relationship with regression line.

Finally, we review how to determine the fitted values and residuals for observations in the dataset.

France is one of the UN member states, and suppose we want to determine the fitted fertility rate for France based on the linear regression.

We start by determining what is the location of France in the UN_data_ch10 data frame, using rowid_to_column() and filter() with the variable country equal to “France.”

The pull() function converts the row number as a data frame to a single value:

UN_data_ch10 |>

rowid_to_column() |>

filter(country == "France")|>

pull(rowid)[1] 57France is the 57th member state in UN_data_ch10. Its observed fertility rate and life expectancy are:

UN_data_ch10 |>

filter(country == "France")# A tibble: 1 × 3

country life_exp fert_rate

<chr> <dbl> <dbl>

1 France 82.59 1.8France’s life expectancy is \(x_{57} = 82.59\) years and the fertility rate is \(y_{57} =1.8\). Using the regression line from earlier, we can determine France’s fitted fertility rate:

\[ \begin{aligned} \widehat{y}_{57} &= 12.613 - 0.137 \cdot x_{57}\\ &= 12.613 - 0.137 \cdot 82.59\\ &= 1.258. \end{aligned} \]

Based on our regression line we would expect France’s fertility rate to be 1.258. The observed fertility rate for France was 1.8, so the residual for France is \(y_{57} - \widehat{y}_{57} = 1.8 - 1.258 = 0.542\).

Using R we are not required to manually calculate the fitted values and residual for each UN member state.

We do this directly using the regression model simple_model and the get_regression_points() function.

To do this only for France, we filter() the 57th observation in the data frame.

simple_model |>

get_regression_points() |>

filter(ID == 57)| ID | fert_rate | life_exp | fert_rate_hat | residual |

|---|---|---|---|---|

| 57 | 1.8 | 82.6 | 1.26 | 0.542 |

We can retrieve this information for each observation. Here we show the first few rows:

simple_model |>

get_regression_points()# A tibble: 183 × 5

ID fert_rate life_exp fert_rate_hat residual

<int> <dbl> <dbl> <dbl> <dbl>

1 1 4.3 53.65 5.237 -0.937

2 2 1.4 79.47 1.687 -0.287

3 3 2.7 78.03 1.885 0.815

4 4 5 62.11 4.074 0.926

5 5 1.6 77.8 1.916 -0.316

6 6 1.9 78.31 1.846 0.054

7 7 1.6 76.13 2.146 -0.546

8 8 1.6 83.09 1.189 0.411

9 9 1.5 82.27 1.302 0.198

10 10 1.6 74.15 2.418 -0.818

# ℹ 173 more rowsThis concludes our review of material covered in Section 5.1. We now explain how to use this information for statistical inference.

10.1.2 The model

As we did in Chapters 8 on confidence intervals and 9 on hypothesis testing, we present this problem in the context of a population and associated parameters of interest. We then collect a random sample from this population and use it to estimate these parameters.

We assume that this population has a response variable (\(Y\)), an explanatory variable (\(X\)), and there is a statistical linear relationship between these variables, given by the linear model

\[Y = \beta_0 + \beta_1 \cdot X + \epsilon,\]

where \(\beta_0\) is the population intercept and \(\beta_1\) is the population slope. These are now the parameters of the model that alongside the explanatory variable (\(X\)) produce the equation of a line. The statistical part of this relationship is given by \(\epsilon\), a random variable called the error term. The error term accounts for the portion of \(Y\) that is not explained by the line.

We make additional assumptions about the distribution of the error term, \(\epsilon\). The assumed expected value of the error term is zero, and the assumed standard deviation is equal to a positive constant called \(\sigma\), or in mathematical terms: \(E(\epsilon) = 0\) and \(SD(\epsilon) = \sigma.\)

We review the meaning of these quantities. If you were to take a large number of observations from this population, we would expect the error terms sometimes to be greater than zero and sometimes less than zero, but on average, be equal to zero. Similarly, some error terms will be very close to zero and others very far from zero, but on average, we would expect them to be roughly \(\sigma\) units away from zero.

Recall the square of the standard deviation is called the variance, so \(Var(\epsilon) = \sigma^2\). The variance of the error term is equal to \(\sigma^2\) regardless of the value of \(X\). This property is called homoskedasticity or constancy of the variance. It will be useful later on in our analysis.

10.1.3 Using a sample for inference

As we did in Chapters 8 and 9, we use a sample to estimate the parameters in the population.

We use data collected from the Old Faithful Geyser in Yellowstone National Park in Wyoming, USA.

This dataset contains the duration of the geyser eruption in seconds and the waiting time to the next eruption in minutes.

The duration of the current eruption can help determine fairly well the waiting time to the next eruption.

For this example, we use a sample of data collected by volunteers and saved on the website https://geysertimes.org/ between June 1st, 2024 and August 19th, 2024.

These data are stored in the old_faithful_2024 data frame in the moderndive package.

While data collected by volunteers are not a random sample, as the volunteers could introduce some sort of bias, the eruptions selected by the volunteers had no specific patterns.

Further, beyond the individual skill of each volunteer measuring the times appropriately, no response bias or preference seems to be present.

Therefore, it seems safe to consider the data a random sample. The first ten rows are shown here:

old_faithful_2024# A tibble: 114 × 6

eruption_id date time waiting webcam duration

<dbl> <date> <dbl> <dbl> <chr> <dbl>

1 1473854 2024-08-19 538 180 Yes 235

2 1473352 2024-08-15 1541 184 Yes 259

3 1473337 2024-08-15 1425 116 Yes 137

4 1473334 2024-08-15 1237 188 Yes 222

5 1473331 2024-08-15 1131 106 Yes 105

6 1473328 2024-08-15 944 187 Yes 180

7 1473207 2024-08-14 1231 182 Yes 244

8 1473201 2024-08-14 1041 190 Yes 278

9 1473137 2024-08-13 1810 138 Yes 249

10 1473108 2024-08-13 1624 186 Yes 262

# ℹ 104 more rowsBy looking at the first row we can tell, for example, that an eruption on August 19, 2024, at 5:38 AM lasted 235 seconds, and the waiting time for the next eruption was 180 minutes. We next display the summary for these two variables:

old_faithful_2024 |>

select(duration, waiting) |>

tidy_summary()| column | n | group | type | min | Q1 | mean | median | Q3 | max | sd |

|---|---|---|---|---|---|---|---|---|---|---|

| duration | 114 | numeric | 99 | 180 | 217 | 240 | 259 | 300 | 59.0 | |

| waiting | 114 | numeric | 102 | 139 | 160 | 176 | 184 | 201 | 29.9 |

We have a sample of 114 eruptions, lasting between 99 seconds and 300 seconds, and the waiting time to the next eruption was between 102 minutes and 201 minutes. Observe that each observation is a pair of values, the value of the explanatory variable (\(X\)) and the value of the response (\(Y\)). The sample takes the form:

\[\begin{array}{c} (x_1,y_1)\\ (x_2, y_2)\\ \vdots\\ (x_n, y_n)\\ \end{array}\]

where, for example, \((x_2, y_2)\) is the pair of explanatory and response values, respectively, for the second observation in the sample. More generally, we denote the \(i\)th pair by \((x_i, y_i)\), where \(x_i\) is the observed value of the explanatory variable \(X\) and \(y_i\) is the observed value of the response variable \(Y\). Since the sample has \(n\) observations we let \(i=1\), \(\dots\), \(n\).

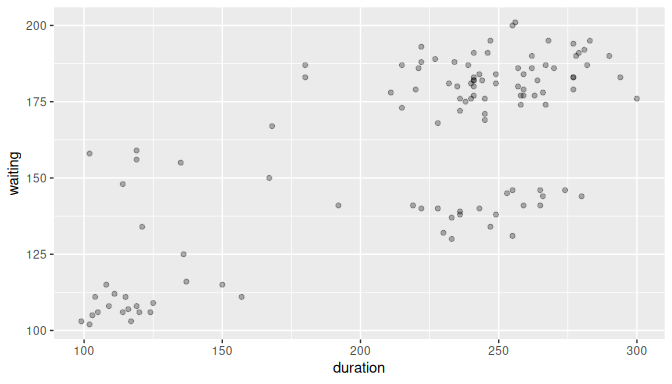

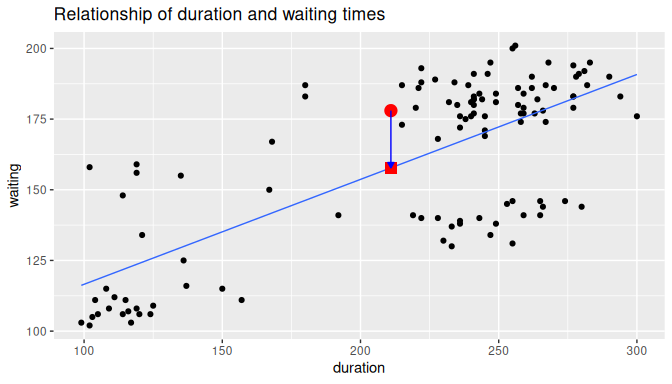

In our example \(n = 114\), and \((x_2, y_2) = (259, 184)\). Figure 10.2 shows the scatterplot for the entire sample with some transparency set to check for overplotting:

FIGURE 10.2: Scatterplot of relationship of eruption duration and waiting time.

The relationship seems positive and, to some extent, linear.

10.1.4 The method of least squares

If the association of these variables is linear or approximately linear, we can apply the linear model described in Subsection 10.1.2 to each observation in the sample:

\[\begin{aligned} y_1 &= \beta_0 + \beta_1 \cdot x_1 + \epsilon_1\\ y_2 &= \beta_0 + \beta_1 \cdot x_2 + \epsilon_2\\ \vdots & \phantom{= \beta_0 + \beta_1 \cdot + \epsilon_2 +}\vdots \\ y_n &= \beta_0 + \beta_1 \cdot x_n + \epsilon_n \end{aligned}\]

We want to be able to use this model to describe the relationship between the explanatory variable and the response, but the parameters \(\beta_0\) and \(\beta_1\) are unknown to us. We estimate these parameters using the random sample by applying the least-squares method introduced in Section 5.1. We compute the estimators for the intercept (\(\beta_0\)) and slope (\(\beta_1\)) that minimize the sum of squared residuals:

\[\sum_{i=1}^n \left[y_i - (\beta_0 + \beta_1 \cdot x_i)\right]^2.\]

This is an optimization problem and to solve it analytically we require calculus and the topic goes beyond the scope of this book. We provide a sketch of the solution here for those familiar with the method: using the expression above we find the partial derivative with respect to \(\beta_0\) and equate that expression to zero, the partial derivative with respect to \(\beta_1\) and equate that expression to zero, and use those two equations to solve for \(\beta_0\) and \(\beta_1\). The solutions are the regression coefficients introduced first in Section 5.1: \(b_0\) is the estimator of \(\beta_0\) and \(b_1\) is the estimator of \(\beta_1\). They are called the least squares estimators and their mathematical expressions are:

\[b_1 = \frac{\sum_{i=1}^n(x_i - \bar x)(y_i - \bar y)}{\sum_{i=1}^n(x_i - \bar x)^2} \text{ and } b_0 = \bar y - b_1 \cdot \bar x.\]

Furthermore, an estimator for the standard deviation of \(\epsilon_i\) is given by

\[s = \sqrt{\frac{\sum_{i=1}^n \left[y_i - (b_0 + b_1 \cdot x_i)\right]^2}{n-2}} = \sqrt{\frac{\sum_{i=1}^n \left(y_i - \widehat{y}_i\right)^2}{n-2}}.\]

These or equivalent calculations are done in R when using the lm() function.

For old_faithful_2024 we get the results shown in Table

10.1:

# Fit regression model:

model_1 <- lm(waiting ~ duration, data = old_faithful_2024)

# Get the coefficients and standard deviation for the model

coef(model_1)

sigma(model_1)| Coefficients | Values | |

|---|---|---|

| (Intercept) | b0 | 79.459 |

| duration | b1 | 0.371 |

| s | 20.370 |

Based on these data and assuming the linear model is appropriate, we can say that for every additional second that an eruption lasts, the waiting time to the next eruption increases, on average, by 0.37 minutes. Any eruption lasts longer than zero seconds, so the intercept has no meaningful interpretation in this example. Finally, we roughly expect the waiting time for the next eruption to be 20.37 minutes away from the regression line value, on average.

10.1.5 Properties of the least squares estimators

The least squares method produces the best-fitting line by selecting the least squares estimators, \(b_0\) and \(b_1\), that make the sum of residual squares the smallest possible. But the choice of \(b_0\) and \(b_1\) depends on the sample observed. For every random sample taken from the data, different values for \(b_0\) and \(b_1\) will be determined. In that sense, the least squares estimators, \(b_0\) and \(b_1\), are random variables and as such, they have very useful properties:

- \(b_0\) and \(b_1\) are unbiased estimators of \(\beta_0\) and \(\beta_1\), or using mathematical notation: \(E(b_0) = \beta_0\) and \(E(b_1) = \beta_1\). This means that, for some random samples, \(b_1\) will be greater than \(\beta_1\) and for others less than \(\beta_1\). On average, \(b_1\) will be equal to \(\beta_1\).

- \(b_0\) and \(b_1\) are linear combinations of the observed responses \(y_1\), \(y_2\), \(\dots\), \(y_n\). This means that, for example for \(b_1\), there are known constants \(c_1\), \(c_2\), \(\dots\), \(c_n\) such that \(b_1 = \sum_{i=1}^n c_iy_i\).

- \(s^2\) is an unbiased estimator of the variance \(\sigma^2\).

These properties will be useful in the next subsection, once we perform theory-based inference for regression.

10.1.6 Relating basic regression to other methods

To wrap-up this section, we’ll be investigating how regression relates to two different statistical techniques. One of them was covered already in this book, the difference in sample means, and the other is new to the text but is related, ANOVA. We’ll see how both can be represented in the regression framework.

Two-sample difference in means

The two-sample difference in means is a common statistical technique used to compare the means of two groups as seen in Section 9.6. It is often used to determine if there is a significant difference in the mean response between two groups, such as a treatment group and a control group. The two-sample difference in means can be represented in the regression framework by using a dummy variable to represent the two groups.

Let’s again consider the movies_sample data frame in the moderndive package. We’ll compare once more the average rating for the genres of “Action” versus “Romance.” We can use the lm() function to fit a linear model with a dummy variable for the genre and then use get_regression_table():

mod_diff_means <- lm(rating ~ genre, data = movies_sample)

get_regression_table(mod_diff_means)| term | estimate | std_error | statistic | p_value | lower_ci | upper_ci |

|---|---|---|---|---|---|---|

| intercept | 5.28 | 0.265 | 19.92 | 0.000 | 4.746 | 5.80 |

| genre: Romance | 1.05 | 0.364 | 2.88 | 0.005 | 0.321 | 1.77 |

Note from Table 10.2 that p_value for the genre: Romance row is the \(p\)-value for the hypothesis test of

\[ H_0: \text{action and romance have the same mean rating} \] \[ H_A: \text{action and romance have different mean ratings} \]

This \(p\)-value result matches closely with what was found in Section 9.6, but here we are using a theory-based approach with a linear model. The estimate for the genre: Romance row is the observed difference in means between the “Action” and “Romance” genres that we also saw in Section 9.6, except the sign is switched since the “Action” genre is the reference level.

ANOVA

ANOVA, or analysis of variance, is a statistical technique used to compare the means of three or more groups by seeing if there is a statistically significant difference between the means of multiple groups. ANOVA can be represented in the regression framework by using dummy variables to represent the groups. Let’s say we wanted to compare the popularity (numeric) values in the spotify_by_genre data frame from the moderndive package across the genres of country, hip-hop, and rock. We use the slice_sample() function after narrowing in on our selected columns and filtered rows of interest to see what a few rows of this data frame look like in Table 10.3.

spotify_for_anova <- spotify_by_genre |>

select(artists, track_name, popularity, track_genre) |>

filter(track_genre %in% c("country", "hip-hop", "rock"))

spotify_for_anova |>

slice_sample(n = 5)| artists | track_name | popularity | track_genre |

|---|---|---|---|

| Counting Crows | Mr. Jones | 0 | rock |

| Luke Bryan | Country Girl (Shake It For Me) | 2 | country |

| Salebarbes | Marcher l’plancher - Live | 42 | country |

| Darius Rucker | Wagon Wheel | 1 | country |

| Billy Joel | Vienna | 78 | rock |

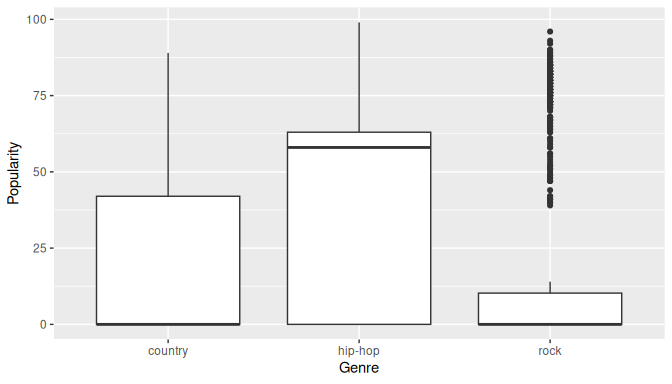

Before we fit a linear model, let’s take a look at the boxplot of track_genre versus popularity in Figure 10.3 to see if there are any differences in the distributions of the three genres.

ggplot(spotify_for_anova, aes(x = track_genre, y = popularity)) +

geom_boxplot() +

labs(x = "Genre", y = "Popularity")

FIGURE 10.3: Boxplot of popularity by genre.

We can also compute the mean popularity grouping by track_genre:

mean_popularities_by_genre <- spotify_for_anova |>

group_by(track_genre) |>

summarize(mean_popularity = mean(popularity))

mean_popularities_by_genre# A tibble: 3 × 2

track_genre mean_popularity

<chr> <dbl>

1 country 17.028

2 hip-hop 37.759

3 rock 19.001We can use the lm() function to fit a linear model with dummy variables for the genres. We’ll then use the get_regression_table() function to get the regression table in Table 10.4.

mod_anova <- lm(popularity ~ track_genre, data = spotify_for_anova)

get_regression_table(mod_anova)| term | estimate | std_error | statistic | p_value | lower_ci | upper_ci |

|---|---|---|---|---|---|---|

| intercept | 17.03 | 0.976 | 17.45 | 0.000 | 15.114 | 18.94 |

| track_genre: hip-hop | 20.73 | 1.380 | 15.02 | 0.000 | 18.025 | 23.44 |

| track_genre: rock | 1.97 | 1.380 | 1.43 | 0.153 | -0.733 | 4.68 |

The estimate for the track_genre: hip-hop and track_genre: rock rows are the differences in means between the “hip-hop” and “country” genres and the “rock” and “country” genres, respectively. The “country” genre is the reference level. These values match up (with some rounding differences) to what is shown in mean_popularities_by_genre.

The p_value column corresponds to hip-hop having a statistically higher mean popularity compared to country with a value of close to 0 (reported as 0). It also gives us that rock does not have a statistically significant \(p\)-value at 0.153, which would make us inclined to say that rock does not have a significantly higher popularity compared to country.

The traditional ANOVA doesn’t give this level of granularity. It can be performed using the aov() function and the anova() function via a pipe (|>):

Analysis of Variance Table

Response: popularity

Df Sum Sq Mean Sq F value Pr(>F)

track_genre 2 261843 130922 137 <0.0000000000000002 ***

Residuals 2997 2855039 953

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The small \(p\)-value here of 2.2e-16 is very close to 0, which would lead us to reject the null hypothesis that the mean popularities are equal across the three genres. This is consistent with the results we found using the linear model. The traditional ANOVA results do not tell us which means are different from each other though, but the linear model does. ANOVA tells us only that a difference exists in the means of the groups.

Learning check

(LC10.1) What does the error term \(\epsilon\) in the linear model \(Y = \beta_0 + \beta_1 \cdot X + \epsilon\) represent?

- A. The exact value of the response variable.

- B. The predicted value of the response variable based on the model.

- C. The part of the response variable not explained by the line.

- D. The slope of the linear relationship between \(X\) and \(Y\).

(LC10.2) Which of the following is a property of the least squares estimators \(b_0\) and \(b_1\)?

- A. They are biased estimators of the population parameters \(\beta_0\) and \(\beta_1\).

- B. They are linear combinations of the observed responses \(y_1, y_2, \ldots, y_n\).

- C. They are always equal to the population parameters \(\beta_0\) and \(\beta_1\).

- D. They depend on the specific values of the explanatory variable \(X\) only.

(LC10.3) How can the difference in means between two groups be represented in a linear regression model?

- A. By adding an interaction term between the groups and the response variable.

- B. By fitting separate regression lines for each group and comparing their slopes.

- C. By including a dummy variable to represent the groups.

- D. By subtracting the mean of one group from the mean of the other and using this difference as the predictor.

10.2 Theory-based inference for simple linear regression

This section introduces the conceptual framework needed for theory-based inference for regression (see Subsections 10.2.1 and 10.2.2) and discusses the two most prominent methods for inference: confidence intervals (Subsection 10.2.3) and hypothesis tests (Subsection 10.2.4). Some of this material is slightly more technical than other sections in this chapter, but most of the material is illustrated by working with a real example and interpretations and explanations complement the theory. Subsection 10.2.5 presents the R code needed to calculate relevant quantities for inference. Feel free to read this section first.

10.2.1 Conceptual framework

We start by reviewing the assumptions of the linear model. We continue using the old_faithful_2024 to illustrate some of this framework. Recall that we have a random sample of \(n = 114\) observations.

Since we assume a linear relationship between the duration of an eruption and the waiting time to the next eruption, we can express the linear relationship for the \(i\)th observation as \(y_i = \beta_0 + \beta_1 \cdot x_i + \epsilon_i\) for \(i=1,\dots,n\). Observe that \(x_i\) is the duration of the \(i\)th eruption in the sample, \(y_i\) is the waiting time to the next eruption, and \(\beta_0\) and \(\beta_1\) are the population parameters that are considered constant.

The error term \(\epsilon_i\) is a random variable that represents how different the observed response \(y_i\) is from the expected response \(\beta_0 + \beta_1 \cdot x_i\).

We can illustrate the role of the error term using two observations from our old_faithful_2024 dataset.

We assume for now that the linear model is appropriate and truly represents the relationship between duration and waiting times.

We select the 49th and 51st observations in our sample by using the function slice() with the corresponding rows:

# A tibble: 2 × 6

eruption_id date time waiting webcam duration

<dbl> <date> <dbl> <dbl> <chr> <dbl>

1 1469584 2024-07-18 1117 139 Yes 236

2 1469437 2024-07-17 1157 176 Yes 236Observe that the duration time is the same for both observations, but the response waiting time is different.

Assuming that the linear model is appropriate, both responses can be expressed as:

\[\begin{aligned} y_{49} &= \beta_0 + \beta_1 \cdot 236 + \epsilon_{49}\\ y_{51} &= \beta_0 + \beta_1 \cdot 236 + \epsilon_{51} \end{aligned}\]

but \(y_{49} = 139\) and \(y_{51} = 176\). The difference in responses is due to the error term as it accounts for variation in the response not accounted for by the linear model.

In the linear model the error term \(\epsilon_i\) has expected value \(E(\epsilon_i) = 0\) and standard deviation \(SD(\epsilon_i) = \sigma\). Since a random sample is taken, we assume that any two error terms \(\epsilon_i\) and \(\epsilon_j\) for any two different eruptions \(i\) and \(j\) are independent.

In order to perform the theory-based inference we require one additional assumption. We let the error term be normally distributed with an expected value (mean) equal to zero and a standard deviation equal to \(\sigma\):

\[\epsilon_i \sim Normal(0, \sigma).\]

The population parameters \(\beta_0\) and \(\beta_1\) are constants.

Similarly, the duration of the \(i\)th eruption, \(x_i\), is known and also a constant.

Therefore, the expression \(\beta_0 + \beta_1 \cdot x_i\) is a constant. By contrast, \(\epsilon_i\) is a normally distributed random variable.

The response \(y_i\) (the waiting time for the \(i\)th eruption to the next) is the sum of the constant \(\beta_0 + \beta_1 \cdot x_i\) and the normally distributed random variable \(\epsilon_i\).

Based on properties of random variables and the normal distribution, we can state that \(y_i\) is also a normally distribution random variable with mean equal to \(\beta_0 + \beta_1 \cdot x_i\) and standard deviation equal to \(\sigma\):

\[y_i \sim Normal(\beta_0 + \beta_1 x_i\,,\, \sigma)\]

for \(i=1,\dots,n\). Since \(\epsilon_i\) and \(\epsilon_j\) are independent, \(y_i\) and \(y_j\) are also independent for any \(i \ne j\).

In addition, as stated in Subsection 10.1.5, the least-squares estimator \(b_1\) is a linear combination of the random variables \(y_1, \dots, y_n\).

So \(b_1\) is also a random variable!

What does this mean?

The coefficient for the slope results from a particular sample of \(n\) pairs of duration and waiting times.

If we collected a different sample of \(n\) pairs, the coefficient for the slope would likely be different due to sampling variation.

Say we hypothetically collect many random samples of pairs of duration and waiting times, and using the least-squares method compute the slope \(b_1\) for each of these samples.

These slopes would form the sampling distribution of \(b_1\), which we discussed in Subsection 7.3.4 in the context of sample proportions.

What we would learn is that, because \(y_1, \dots, y_n\) are normally distributed and \(b_1\) is a linear combination of these random variables, \(b_1\) is also normally distributed.

After some calculations that go beyond the scope of this book but take into account properties of the expected value and standard deviation of the responses \(y_1, \dots, y_n\), it can be shown that:

\[b_1 \sim Normal \left(\beta_1\,,\, \frac{\sigma}{\sqrt{\sum_{i=1}^n(x_i - \bar x)^2}}\right)\]

That is, \(b_1\) is normally distributed with expected value \(\beta_1\) and standard deviation equal to the expression above (inside the parentheses and after the comma). Similarly, \(b_0\) is a linear combination of \(y_1, \dots, y_n\) and using properties of the expected value and standard deviation of the responses, we get:

\[b_0 \sim Normal \left(\beta_0\,,\, \sigma\sqrt{\frac1n + \frac{\bar x^2}{\sum_{i=1}^n(x_i - \bar x)^2}}\right)\]

We can also standardize the least-square estimators such that

\[z_0 = \frac{b_0 - \beta_0}{\left(\sigma\sqrt{\frac1n + \frac{\bar x^2}{\sum_{i=1}^n(x_i - \bar x)^2}}\right)}\qquad\text{ and }\qquad z_1 = \frac{b_1 - \beta_1}{\left(\frac{\sigma}{\sqrt{\sum_{i=1}^n(x_i - \bar x)^2}}\right)}\]

are the corresponding standard normal distributions.

10.2.2 Standard errors for least-squares estimators

Recall that in Chapter 7 and in Subsection 7.4.6 we discussed that, due to the Central Limit Theorem, the distribution of the sample mean \(\overline X\) was approximately normal with mean equal to the parameter \(\mu\) and standard deviation equal to \(\sigma/\sqrt n\). We then used the estimated standard error of \(\overline X\) to construct confidence intervals and hypothesis tests.

An analogous treatment is now used to construct confidence intervals and hypothesis tests for \(b_0\) and \(b_1\). Observe in the equations above that the standard deviations for \(b_0\) and \(b_1\) are constructed using the sample size \(n\), the values of the explanatory variables, their means, and the standard deviation of \(y_i\) (\(\sigma\)). While most of these values are known to us, \(\sigma\) is typically not.

Instead, we estimate \(\sigma\) using the estimator of the standard deviation, \(s\), introduced in Subsection 10.1.4. The estimated standard deviation of \(b_1\) is called the standard error of \(b_1\), and it is given by:

\[SE(b_1) = \frac{s}{\sqrt{\sum_{i=1}^n(x_i - \bar x)^2}}.\]

Recall that the standard error is the standard deviation of any point estimate computed from a sample. The standard error of \(b_1\) quantifies how much variation the estimator of the slope \(b_1\) may have for different random samples. The larger the standard error, the more variation we would expect in the estimated slope \(b_1\). Similarly, the standard error of \(b_0\) is:

\[SE(b_0) = s\sqrt{\frac1n + \frac{\bar x^2}{\sum_{i=1}^n(x_i - \bar x)^2}}\]

As was discussed in Subsection 8.1.4, when using the estimator \(s\) instead of the parameter \(\sigma\), we are introducing additional uncertainty in our calculations. For example, we can standardize \(b_1\) using

\[t = \frac{b_1 - \beta_1}{SE(b_1)}.\]

Because we are using \(s\) to calculate \(SE(b_1)\), the value of the standard error changes from sample to sample, and this additional uncertainty makes the distribution of the test statistic \(t\) no longer normal. Instead, it follows a \(t\)-distribution with \(n-2\) degrees of freedom. The loss of two degrees of freedom relates to the fact that we are trying to estimate two parameters in the linear model: \(\beta_0\) and \(\beta_1\). We are ready to use this information to perform inference for the least-square estimators, \(b_0\) and \(b_1\).

10.2.3 Confidence intervals for the least-squares estimators

A 95% confidence interval for \(\beta_1\) can be thought of as a range of plausible values for the population slope \(\beta_1\) of the linear relationship between duration and waiting times.

In general, if the sampling distribution of an estimator is normal or approximately normal, the confidence interval for the relevant parameter is

\[ \text{point estimate} \pm \text{margin of error} = \text{point estimate} \pm (\text{critical value} \cdot \text{standard error}). \]

The formula for a 95% confidence interval for \(\beta_1\) is given by \(b_1 \pm q \cdot SE(b_1)\) where the critical value \(q\) is determined by the level of confidence required, the sample size used, and the corresponding degrees of freedom needed for the \(t\)-distribution.

We now illustrate how to find the 95% confidence interval for the slope in the Old Faithful example manually, but we show later how to do this directly in R using the function get_regression_table().

First, observe that \(n = 114\), so the degrees of freedom are \(n-2 = 112\). The critical value for a 95% confidence interval on a \(t\)-distribution with 112 degrees of freedom is \(q = 1.981\). Second, the estimates \(b_0\), \(b_1\), and \(s\) were found earlier and are shown here again in Table 10.5:

| Coefficients | Values | |

|---|---|---|

| (Intercept) | b0 | 79.459 |

| duration | b1 | 0.371 |

| s | 20.370 |

Third, the standard error for \(b_1\) using the formula presented earlier is:

\[SE(b_1) = \frac{s}{\sqrt{\sum_{i=1}^n(x_i - \bar x)^2}} = \frac{20.37}{627.583} = 0.032.\]

Finally, the 95% confidence interval for \(\beta_1\) is given by:

\[\begin{aligned} b_1 &\pm q \cdot SE(b_1)\\ &= 0.371 \pm 1.981\cdot 0.032\\ &= (0.308 , 0.434) \end{aligned}\]

We are 95% confident that the population slope \(\beta_1\) is a number between 0.308 and 0.434.

The construction of a 95% confidence interval for \(\beta_0\) follows exactly the same steps using \(b_0\), \(SE(b_0)\), and the same critical value \(q\) as the degrees of freedom for the \(t\)-distribution are exactly the same, \(n-2\):

\[\begin{aligned} b_0 &\pm q \cdot SE(b_0)\\ &= 79.459 \pm 1.981\cdot 7.311\\ &= (-14.112, 14.854) \end{aligned}\]

The results of the confidence intervals are valid only if the linear model assumptions are satisfied. We discuss these assumptions in Section 10.2.6.

10.2.4 Hypothesis test for population slope

To perform a hypothesis test for \(\beta_1\), the general formulation of a two-sided test is

\[\begin{aligned} H_0: \beta_1 = B\\ H_A: \beta_1 \ne B \end{aligned}\]

where \(B\) is the hypothesized value for \(\beta_1\). Recall the terminology, notation, and definitions related to hypothesis tests we introduced in Section 9.3. A hypothesis test consists of a test between two competing hypotheses: (1) a null hypothesis \(H_0\) versus (2) an alternative hypothesis \(H_A\).

Test statistic

A test statistic is a point estimator used for hypothesis testing. Here, the t-test statistic is given by

\[t = \frac{b_1 - B}{SE(b_1)}.\]

This test statistic follows, under the null hypothesis, a \(t\)-distribution with \(n-2\) degrees of freedom. A particularly useful test is whether there is a linear association between the explanatory variable and the response, which is equivalent to testing:

\[\begin{aligned} H_0: \beta_1 = 0\\ H_1: \beta_1 \ne 0 \end{aligned}\]

For example, we may use this test to determine whether there is a linear relationship between the duration of the Old Faithful geyser eruptions (duration) and the waiting time to the next eruption (waiting).

The null hypothesis \(H_0\) assumes that the population slope \(\beta_1\) is 0.

If this is true, then there is no linear relationship between the duration and waiting times.

When performing a hypothesis test, we assume that the null hypothesis \(H_0: \beta_1 = 0\) is true and try to find evidence against it based on the data observed.

The alternative hypothesis \(H_A\), on the other hand, states that the population slope \(\beta_1\) is not 0, meaning that longer eruption duration may result in greater or smaller waiting times to the next eruption.

This suggests either a positive or negative linear relationship between the explanatory variable and the response.

Since evidence against the null hypothesis may happen in either direction in this context, we call this a two-sided test.

The t-test statistic for this problem is given by:

\[t = \frac{b_1 - 0}{SE(b_1)} = \frac{0.371 - 0}{0.032} = 11.594\]

The p-value

Recall the terminology, notation, and definitions related to hypothesis tests we introduced in Section 9.3.

The definition of the \(p\)-value is the probability of obtaining a test statistic just as extreme as or more extreme than the one observed, assuming the null hypothesis \(H_0\) is true.

We can intuitively think of the \(p\)-value as quantifying how “extreme” the estimated slope is (\(b_1\) = 0.371), assuming there is no relationship between duration and waiting times.

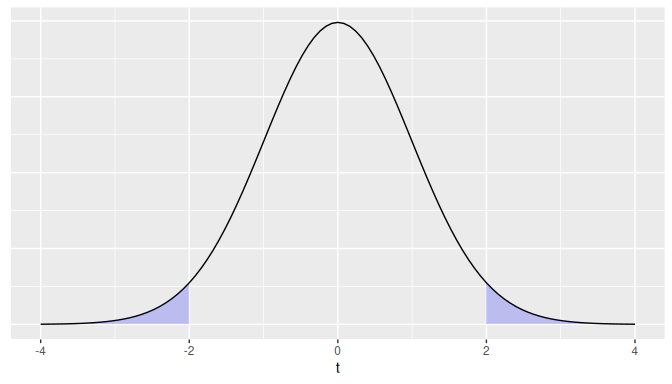

For a two-sided test, if the test statistic is \(t = 2\) for example, the \(p\)-value is calculated as the area under the \(t\)-curve to the left of \(-2\) and to the right of \(2\) is shown in Figure 10.4.

FIGURE 10.4: Illustration of a two-sided p-value for a t-test.

In our Old Faithful geyser eruptions example, the test statistic for the test \(H_0: \beta_1 = 0\) was \(t = 11.594\). The \(p\)-value was so small that R simply shows that it is equal to zero.

Interpretation

Following the hypothesis testing procedure we outlined in Section 9.5, since the \(p\)-value was practically 0, for any choice of significance level \(\alpha\), we would reject \(H_0\) in favor of \(H_A\).

In other words, assuming that there is no linear association between duration and waiting times, the probability of observing a slope as extreme as the one we have attained using our random sample, was practically zero.

In conclusion, we reject the null hypothesis that there is no linear relationship between duration and waiting times.

We have enough statistical evidence to conclude that there is a linear relationship between these variables.

Learning check

(LC10.4) In the context of a linear regression model, what does the null hypothesis \(H_0: \beta_1 = 0\) represent?

- A. There is no linear association between the response and the explanatory variable.

- B. The difference between the observed and predicted values is zero.

- C. The linear association between response and explanatory variable crosses the origin.

- D. The probability of committing a Type II Error is zero.

(LC10.5) Which of the following is an assumption of the linear regression model?

- A. The error terms \(\epsilon_i\) are normally distributed with constant variance.

- B. The error terms \(\epsilon_i\) have a non-zero mean.

- C. The error terms \(\epsilon_i\) are dependent on each other.

- D. The explanatory variable must be normally distributed.

(LC10.6) What does it mean when we say that the slope estimator \(b_1\) is a random variable?

- A. \(b_1\) will be the same for every sample taken from the population.

- B. \(b_1\) is a fixed value that does not change with different samples.

- C. \(b_1\) can vary from sample to sample due to sampling variation.

- D. \(b_1\) is always equal to the population slope \(\beta_1\).

10.2.5 The regression table in R

The least-square estimates, standard errors, test statistics, \(p\)-values, and confidence interval bounds discussed in Section 10.2 and Subsections 10.2.1, 10.2.2, 10.2.3, and 10.2.4 can be calculated, all at once, using the R wrapper function get_regression_table() from the moderndive package.

For model_1, the output is presented in Table 10.6.

get_regression_table(model_1)| term | estimate | std_error | statistic | p_value | lower_ci | upper_ci |

|---|---|---|---|---|---|---|

| intercept | 79.459 | 7.311 | 10.9 | 0 | 64.973 | 93.944 |

| duration | 0.371 | 0.032 | 11.4 | 0 | 0.307 | 0.435 |

Note that the first row in Table 10.6 addresses inferences for the intercept \(\beta_0\), and the second row deals with inference for the slope \(\beta_1\). The headers of the table present the information found for inference:

- The

estimatecolumn contains the least-squares estimates, \(b_0\) (first row) and \(b_1\) (second row). - The

std_errorcontains \(SE(b_0)\) and \(SE(b_1)\) (the standard errors for \(b_0\) and \(b_1\)), respectively. We defined these standard errors in Subsection 10.2.2. - The

statisticcolumn contains the \(t\)-test statistic for \(b_0\) (first row) and \(b_1\) (second row). If we focus on \(b_1\), the \(t\)-test statistic was constructed using the equation

\[ t = \frac{b_1 - 0}{SE(b_1)} = 11.594 \]

which corresponds to the hypotheses \(H_0: \beta_1 = 0\) versus \(H_A: \beta_1 \ne 0\).

- The

p_valueis the probability of obtaining a test statistic just as extreme as or more extreme than the one observed, assuming the null hypothesis is true. For this hypothesis test, the \(t\)-test statistic was equal to 11.594 and, therefore, the \(p\)-value was near zero, suggesting rejection of the null hypothesis in favor of the alternative. - The values

lower_ciandupper_ciare the lower and upper bounds of a 95% confidence interval for \(\beta_1\).

Please refer to previous subsections for the conceptual framework and a more detailed description of these quantities.

10.2.6 Model fit and model assumptions

We have introduced the linear model alongside assumptions about many of its elements and assumed all along that this is an appropriate representation of the relationship between the response and the explanatory variable. In real-life applications, it is uncertain whether the relationship is appropriately described by the linear model or whether all the assumptions we have introduced are satisfied.

Of course, we do not expect the linear model described in this chapter, or any other model, to be a perfect representation of a phenomenon presented in nature. Models are simplifications of reality in that they do not intend to represent exactly the relationship in question but rather provide useful approximations that help improve our understanding of this relationship. Even more, we want models that are as simple as possible and still capture relevant features of the natural phenomenon we are studying. This approach is known as the principle of parsimony or Occam’s razor.

But even with a simple model like a linear one, we still want to know if it accurately represents the relationship in the data. This is called model fit. In addition, we want to determine whether or not the model assumptions have been met.

There are four elements in the linear model we want to check. An acrostic is a composition in which certain letters from each piece form a word or words. To help you remember the four elements, we can use the following acrostic:

-

Linearity of relationship between variables

- Is the relationship between \(y_i\) and \(x_i\) truly linear for each \(i = 1, \dots, n\)? In other words, is the linear model \(y_i = \beta_0 + \beta_1 \cdot x_i + \epsilon_i\) a good fit?

-

Independence of each of the response values \(y_i\)

- Are \(y_i\) and \(y_j\) independent for any \(i \ne j\)?

-

Normality of the error terms

- Is the distribution of the error terms at least approximately normal?

-

Equality or constancy of the variance for \(y_i\) (and for the error term \(\epsilon_i\))

- Is the variance, or equivalently standard deviation, of the response \(y_i\) always the same, regardless of the fitted value (\(\widehat{y}_i\)) or the regressor value (\(x_i\))?

In this case, our acrostic follows the word LINE. This can serve as a nice reminder of what to check when using linear regression. To check for Linearity, Normality, and Equal or constant variance, we use the residuals of the linear regression via residual diagnostics as we explain in the next subsection. To check for Independence we can use the residuals if the data was collected using a time sequence or other type of sequences. Otherwise, independence may be achieved by taking a random sample, which eliminates a sequential type of dependency.

We start by reviewing how residuals are calculated, introduce residual diagnostics via visualizations, use the example of the Old Faithful geyser eruptions to determine whether each of the four LINE elements are met, and discuss the implications.

Residuals

Recall that given a random sample of \(n\) pairs \((x_1, y_1), \dots, (x_n,y_n)\) the linear regression was given by:

\[\widehat{y}_i = b_0 + b_1 \cdot x_i\]

for all the observations \(i = 1, dots,n\). Recall that the residual as defined in Subsection 5.1.3, is the observed response minus the fitted value. If we denote the residuals with the letter \(e\) we get:

\[e_i = y_i - \widehat{y}_i\]

for \(i = 1, \dots, n\). Combining these two formulas we get

\[y_i = \underline{\widehat{y}_i} + e_i = \underline{b_0 + b_1 \cdot x_i} + e_i\]

the resulting formula looks very similar to our linear model:

\[y_i = \beta_0 + \beta_1 \cdot x_i + \epsilon_i\]

In this context, residuals can be thought of as rough estimates of the error terms. Since many of the assumptions of the linear model are related to the error terms, we can check these assumptions by studying the residuals.

In Figure 10.5, we illustrate one particular residual for the Old Faithful geyser eruption where duration time is the explanatory variable and waiting time is the response.

We use an arrow to connect the observed waiting time (a circle) with the fitted waiting time (a square).

The vertical distance between these two points (or equivalently, the magnitude of the arrow) is the value of the residual for this observation.

FIGURE 10.5: Example of observed value, fitted value, and residual.

We can calculate all the \(n = 114\) residuals by applying the get_regression_points() function to the regression model model_1.

Observe how the resulting values of residual are roughly equal to waiting - waiting_hat (there may be a slight difference due to rounding error).

# Fit regression model:

model_1 <- lm(waiting ~ duration, data = old_faithful_2024)

# Get regression points:

fitted_and_residuals <- get_regression_points(model_1)

fitted_and_residuals# A tibble: 114 × 5

ID waiting duration waiting_hat residual

<int> <dbl> <dbl> <dbl> <dbl>

1 1 180 235 166.666 13.334

2 2 184 259 175.572 8.428

3 3 116 137 130.299 -14.299

4 4 188 222 161.842 26.158

5 5 106 105 118.424 -12.424

6 6 187 180 146.256 40.744

7 7 182 244 170.006 11.994

8 8 190 278 182.623 7.377

9 9 138 249 171.861 -33.861

10 10 186 262 176.686 9.314

# ℹ 104 more rowsResidual diagnostics

Residual diagnostics are used to verify conditions L, N, and E.

While there are more sophisticated statistical approaches that can be used, we focus on data visualization. One of the most useful plots is a residual plot, which is a scatterplot of the residuals against the fitted values.

We use the fitted_and_residuals object to draw the scatterplot using geom_point() with the fitted values (waiting_hat) on the x-axis and the residuals (residual) on the y-axis.

In addition, we add titles to our axes with labs() and draw a horizontal line at 0 for reference using geom_hline() and yintercept = 0, as shown in the following code:

ggplot(fitted_and_residuals, aes(x = waiting_hat, y = residual)) +

geom_point() +

labs(x = "duration", y = "residual") +

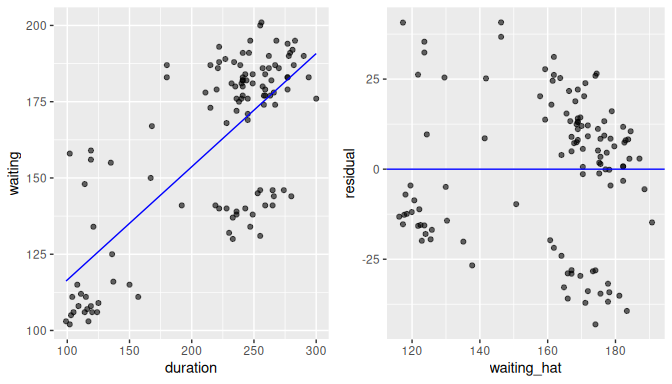

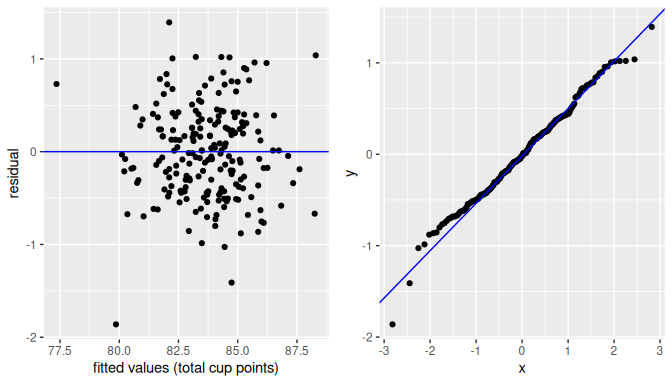

geom_hline(yintercept = 0, col = "blue")In Figure 10.6 we show this residual plot (right plot) alongside the scatterplot for duration vs waiting (left plot).

Note how the residuals on the left plot are determined by the vertical distance between the observed response and the linear regression.

On the right plot (residuals), we have removed the effect of the linear regression and the effect of the residuals is seen as the vertical distance from each point to the zero line (y-axis).

Using this residuals plot, it is easier to spot patterns or trends that may be in conflict with the assumptions of the model, as we describe next.

FIGURE 10.6: The scatterplot and residual plot for the Old Faithful data.

In what follows we show how the residual plot can be used to determine whether the linear model assumptions are met.

Linearity of relationship

We want to check whether the association between the response \(y_i\) and the explanatory variable \(x_i\) is Linear. We expect, due to the error term in the model, that the scatterplot of residuals against fitted values shows some random variation, but the variation should not be systematic in any direction and the trend should not show non-linear patterns.

A scatterplot of residuals against fitted values showing no patterns but simply a cloud of points that seems randomly assigned in every direction with the residuals’ variation (y-axis) about the same for any fitted values (x-axis) and with points located as much above as below the zero line is called a null plot. Plots of residuals against fitted values or regressors that are null plots do not show any evidence against the assumptions of the model. In other words, if we want our linear model to be adequate, we hope to see null plots when plotting residuals against fitted values.

This is largely the case for the Old Faithful geyser example with the residuals against the fitted values (waiting_hat) shown in the right-plot of Figure 10.6.

The residual plot is not a null plot as it appears there are some clusters of points as opposed to a complete random assignment, but there are not clear systematic trends in any direction or the appearance of a non-linear relationship.

So, based on this plot, we believe that the data does not violate the assumption of linearity.

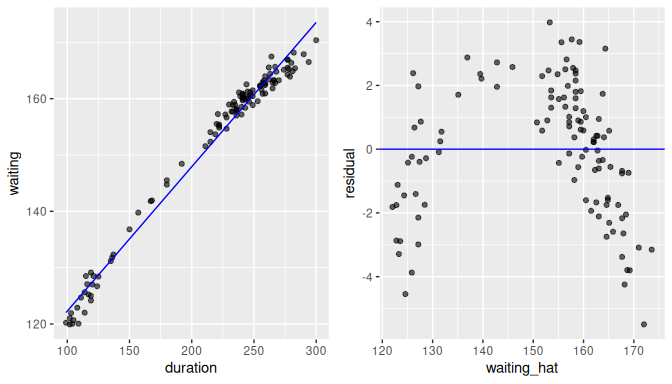

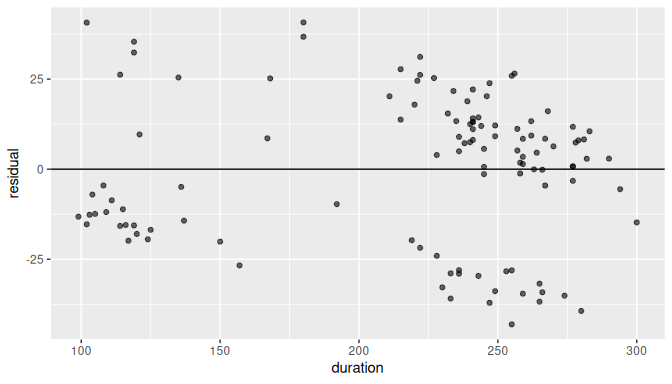

By contrast, assume now that the scatterplot of waiting against duration and its associated residual plot are shown in Figure 10.7.

We are not using the real data here, but simulated data.

A quick look at the scatterplot and regression line (left plot) could lead us to believe that the regression line is an appropriate summary of the relationship.

But if we look carefully, you may notice that the residuals for low values of duration are mostly below the regression line, residuals for values in the middle range of duration are mostly above the regression line, and residuals for large values of duration are again below the regression line.

This is the reason we prefer to use plots of residuals against fitted values (right plot) as we have removed the effect of the regression and can focus entirely on the residuals. The points clearly do not form a line, but rather a U-shaped polynomial curve. If this was the real data observed, using the linear regression with these data would produce results that are not valid or adequate.

FIGURE 10.7: Example of a non-linear relationship.

Independence of the error terms and the response

Another assumption we want to check is the Independence of the response values. If they are not independent, some patterns of dependency may appear in the observed data.

The residuals could be used for this purpose too as they are a rough approximation of the error terms.

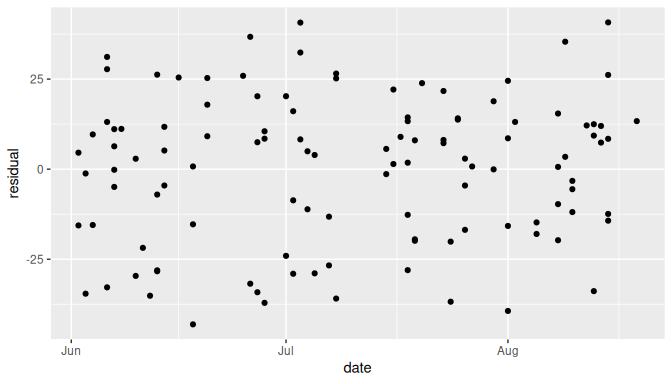

If data was collected in a time sequence or other type of sequence, the residuals may also help us determine lack of independence by plotting the residuals against time.

As it happens, the Old Faithful geyser eruption example does have a time component we can use: the old_faithful_2024 dataset contains the date variable.

We show the plot of residuals against date (time) in Figure 10.8:

FIGURE 10.8: Scatterplot of date (time) vs residuals for the Old Faithful example.

The plot of residuals against time (date) seems to be a null plot.

Based on this plot we could say that the residuals do not exhibit any evidence of dependency.

Now, the observations in this dataset are only a subset of all the Old Faithful geyser eruptions that happen during this time frame and most or all of them are eruptions that do not happened sequentially, one after the next.

Each observation in this dataset represents a unique eruption of Old Faithful, with waiting times and duration recorded separately for each event.

Since these eruptions occur independently of one another, the residuals derived from the regression of waiting versus duration are also expected to be independent.

As discussed in Subsection 10.1.3, we can consider this a random sample.

In this case, the assumption of independence seems acceptable.

Note that the old_faithful_2024 data do not involve repeated measurements or grouped observations that could lead to dependency issues.

Therefore, we can proceed with trusting the regression analysis as we believe that the error terms are not systematically related to one another.

While determining lack of independence may not be easy in certain settings, in particular if no time sequence or other sequence measurements are involved, taking a random sample is the golden standard.

Normality of the error terms

The third assumption we want to check is whether the error terms follow Normal distributions with expected value equal to zero. Using the residuals as a rough estimate of the error term values, we have seen in the right plot of Figure 10.6 that sometimes the residuals are positive and other times negative. We want to see if, on average, the errors equal zero and the shape of their distribution approximate a bell shaped curve.

We can use a histogram to visualize the distribution of the residuals:

ggplot(fitted_and_residuals, aes(residual)) +

geom_histogram(binwidth = 10, color = "white")We can also use a quantile-to-quantile plot or QQ-plot.

The QQ-plot creates a scatterplot of the quantiles (or percentiles) of the residuals against the quantiles of a normal distribution.

If the residuals follow approximately a normal distribution, the scatterplot would be a straight line of 45 degrees.

To draw a QQ-plot for the Old Faithful geyser eruptions example, we use the fitted_and_residuals data frame that contains the residuals of the regression, ggplot() with aes(sample = residual) and the geom_qq() function for drawing the QQ-plot.

We also include the function geom_qq_line() to add a 45-degree line for comparison:

fitted_and_residuals |>

ggplot(aes(sample = residual)) +

geom_qq() +

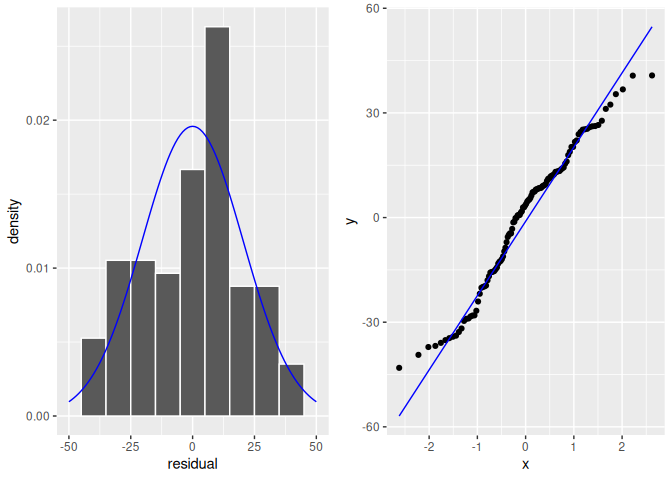

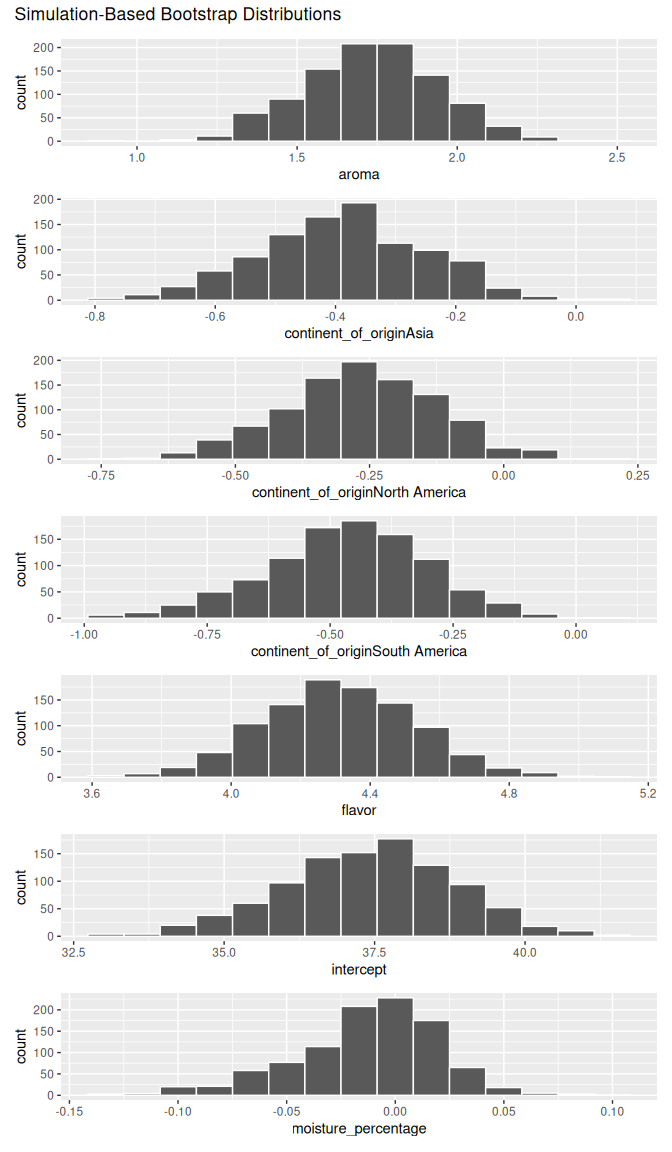

geom_qq_line()In Figure 10.9 we include both the histogram of the residuals including a normal curve for comparison (left plot) and a QQ-plot (right plot):

FIGURE 10.9: Histogram of residuals.

The histogram of the residuals shown in Figure 10.9 (left plot) does not appear exactly normal as there are some deviations, such as the highest bin value appearing just to the right of the center. But the histogram does not seem too far from normality either. The QQ-plot (right plot) supports this conclusion. The scatterplot is not exactly on the 45-degree line but it does not deviate much from it either.

We compare these results with residuals found by simulation that do not appear to follow normality as shown in Figure 10.10. In this case of the model yielding the clearly non-normal residuals on the right, any results from an inference for regression would not be valid.

FIGURE 10.10: Non-normal residuals.

Equality or constancy of variance for errors

The final assumption we check is the Equality or constancy of the variance for the error term across all fitted values or regressor values.

Constancy of variance is also known as homoskedasticity. Using the residuals again as rough estimates of the error terms, we want to check that the dispersion of the residuals is the same for any fitted value \(\widehat{y}_i\) or regressor \(x_i\).

In Figure 10.6, we showed the scatterplot of residuals against fitted values (right plot).

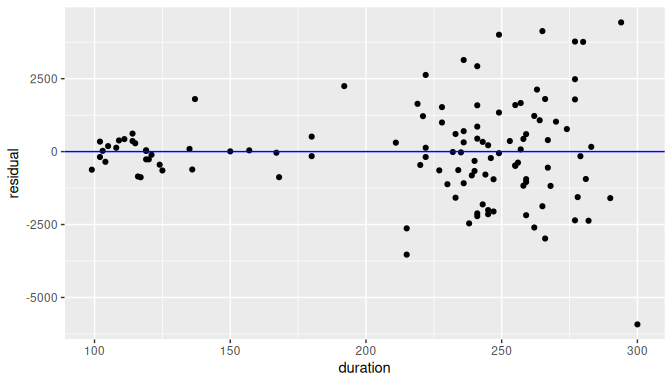

We can also produce the scatterplot of residuals against the regressor duration in Figure 10.11:

ggplot(fitted_and_residuals, aes(x = duration, y = residual)) +

geom_point(alpha = 0.6) +

labs(x = "duration", y = "residual") +

geom_hline(yintercept = 0)

FIGURE 10.11: Plot of residuals against the regressor.

With the exception of the change of scale on the x-axis, it is equivalent (for visualization purposes) to producing a plot of residuals (\(e_i\)) against either the fitted values (\(\widehat{y}_i\)) or the regressor values (\(x_i\)). This happens because the fitted values are a linear transformation of the regressor values, \(\widehat{y}_i = b_0 + b_1\cdot x_i\).

Observe the vertical dispersion or spread of the residuals for different values of duration:

- For

durationvalues between 100 and 150 seconds, the residual values are somewhere between -25 and 40, a spread of about 65 units. - For

durationvalues between 150 and 200 seconds, there are only a handful of observations and it is not clear what the spread is. - For

durationvalues between 200 and 250 seconds, the residual values are somewhere between -37 and 32, a spread of about 69 units. - For

durationvalues between 250 and 300 seconds, the residual values are somewhere between -42 and 27, a spread of about 69 units.

The spread is not exactly constant across all values of duration.

It seems to be slightly higher for greater values of duration, but there seems to be a larger number of observations for higher values of duration as well.

Observe also that there are two or three cluster of points and the dispersion of residuals is not completely uniform.

While the residual plot is not exactly a null plot, there is not clear evidence against the assumption of homoskedasticity.

We are not surprised to see plots such as this one when dealing with real data.

It is possible that the residual plot is not exactly a null plot, because there may be some information we are missing that could improve our model.

For example, we could include another regressor in our model.

Do not forget that we are using a linear model to approximate the relationship between duration and waiting times, and we do not expect the model to perfectly describe this relationship.

When you look at these plots, you are trying to find clear evidence of the data not meeting the assumptions used.

This example does not appear to violate the constant variance assumption.

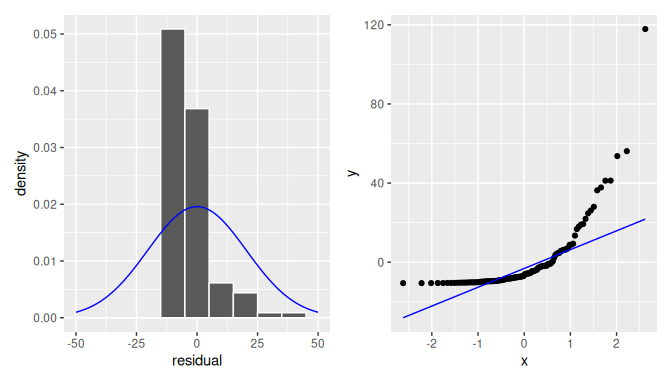

In Figure 10.12 we present an example using simulated data with non-constant variance.

FIGURE 10.12: Example of clearly non-equal variance.

Observe how the spread of the residuals increases as the regressor value increases. Lack of constant variance is also known as heteroskedasticity. When heteroskedasticity is present, some of the results such as the standard error of the least-square estimators, confidence intervals, or the conclusion for a related hypothesis test would not be valid.

What is the conclusion?

We did not find conclusive evidence against any of the assumptions of the model:

- Linearity of relationship between variables

- Independence of the error terms

- Normality of the error terms

- Equality or constancy of the variance

This does not mean that our model was perfectly adequate. For example, the residual plot was not a null plot and had some clusters of points that cannot be explained by the model. But overall, there were no trends that could be considered clear violations of the assumptions and the conclusions we get from this model may be valid.

What do we do when the assumptions are not met?

When there are clear violations of the assumptions in the model, all the results found may be suspect. In addition, there may be some remedial measures that can be taken to improve the model. None of these measures will be addressed here in depth as this material extends beyond the scope of this book, but we briefly discuss potential solutions for future reference.

When the Linearity of the relationship between variables is not met, a simple transformation of the regressor, the response, or both variables may solve the problem. An example of such a transformation is given in Appendix A online. If not, alternative methods such as spline regression, generalized linear models, or non-linear models may be used to address these situations. When additional regressors are available, including other regressors as in multiple linear regression may produce better results.

If the Independence assumption is not met, but the dependency is established by a variable within the data at hand, linear mixed-effects models can also be used. These models may also be referred to as hierarchical or multilevel models.

Small departures of the Normality of the error terms assumption are not too concerning and most of the results, including those related to confidence intervals and hypothesis tests, may still be valid. On the other hand, when the number of violations to the normality assumption is large, many of the results may no longer be valid. Using the advanced methods suggested earlier here may correct these problems too.

When the Equality or constancy of the variance is not met, adjusting the variance by adding weights to individual observations may be possible if relevant information is available that makes those weights known. This method is called weighted linear regression or weighted least squares, and it is a direct extension of the model we have studied. If information of the weights is not available, some methods can be used to provide an estimator for the internal structure of the variance in the model. One of the most popular of these methods is called the sandwich estimator.

Checking that the assumptions of the model are satisfied is a key component of regression analysis. Constructing and interpreting confidence intervals as well as conducting hypothesis tests and providing conclusions from the results of hypothesis tests are directly affected by whether or not assumptions are satisfied. At the same time, it is often the case with regression analysis that a level of subjectivity when visualizing and interpreting plots is present, and sometimes we are faced with difficult statistical decisions.

So what can be done? We suggest transparency and clarity in communicating results. It is important to highlight important elements that may suggest departures from relevant assumptions, and then provide pertinent conclusions. In this way, the stakeholders of any analysis are aware of the model’s shortcomings and can decide whether or not to agree with the conclusions presented to them.

Learning check

(LC10.7) Use the un_member_states_2024 data frame included in the moderndive package with response variable fertility rate (fertility_rate_2022) and the regressor human development index (hdi_2022). Make sure to omit missing values in un_member_states_2024.

- Use the

get_regression_points()function to get the observed values, fitted values, and residuals for all UN member countries. - Perform a residual analysis and look for any systematic patterns in the residuals. Ideally, there should be little to no pattern but comment on what you find here.

(LC10.8) In the context of linear regression, a p_value of near zero for the slope coefficient suggests which of the following?

- A. The intercept is statistically significant at a 95% confidence level.

- B. There is strong evidence against the null hypothesis that the slope coefficient is zero, suggesting there exists a linear relationship between the explanatory and response variables.

- C. The variance of the response variable is significantly greater than the variance of the explanatory variable.

- D. The residuals are normally distributed with mean zero and constant variance.

(LC10.9) Explain whether or not the residual plot helps assess each one of the following assumptions.

- Linearity of the relationship between variables

- Independence of the error terms

- Normality of the error terms

- Equality or constancy of variance

(LC10.10) If the residual plot against fitted values shows a “U-shaped” pattern, what does this suggest?

- A. The variance of the residuals is constant.

- B. The linearity assumption is violated.

- C. The independence assumption is violated.

- D. The normality assumption is satisfied.

10.3 Simulation-based inference for simple linear regression

In this section, we’ll use the simulation-based methods you previously learned in Chapters 8 and 9 to recreate the values in the regression table.

In particular, we’ll use the infer package workflow to

- Construct a 95% confidence interval for the population slope \(\beta_1\) using bootstrap resampling with replacement. We did this previously in Sections 8.2.2 with the almonds data and 8.4 with the

mythbusters_yawndata. - Conduct a hypothesis test of \(H_0: \beta_1 = 0\) versus \(H_A: \beta_1 \neq 0\) using a permutation test. We did this previously in Sections 9.4 with the

spotify_sampledata and 9.6 with themovies_sampleIMDb data.

10.3.1 Confidence intervals for the population slope using infer

We’ll construct a 95% confidence interval for \(\beta_1\) using the infer workflow outlined in Subsection 8.2.3. Specifically, we’ll first construct the bootstrap distribution for the fitted slope \(b_1\) using our single sample of 114 eruptions:

-

specify()the variables of interest inold_faithful_2024with the formula:waiting ~ duration. -

generate()replicates by usingbootstrapresampling with replacement from the original sample of 114 courses. We generatereps = 1000replicates usingtype = "bootstrap". -

calculate()the summary statistic of interest: the fittedslope\(b_1\).

Using this bootstrap distribution, we’ll construct the 95% confidence interval using the percentile method and (if appropriate) the standard error method as well. It is important to note in this case that the bootstrapping with replacement is done row-by-row. Thus, the original pairs of waiting and duration values are always kept together, but different pairs of waiting and duration values may be resampled multiple times. The resulting confidence interval will denote a range of plausible values for the unknown population slope \(\beta_1\) quantifying the relationship between waiting times and duration for Old Faithful eruptions.

Let’s first construct the bootstrap distribution for the fitted slope \(b_1\):

bootstrap_distn_slope <- old_faithful_2024 |>

specify(formula = waiting ~ duration) |>

generate(reps = 1000, type = "bootstrap") |>

calculate(stat = "slope")

bootstrap_distn_slopeResponse: waiting (numeric)

Explanatory: duration (numeric)

# A tibble: 1,000 × 2

replicate stat

<int> <dbl>

1 1 0.334197

2 2 0.331819

3 3 0.385334

4 4 0.380571

5 5 0.369226

6 6 0.370921

7 7 0.337145

8 8 0.417517

9 9 0.343136

10 10 0.359239

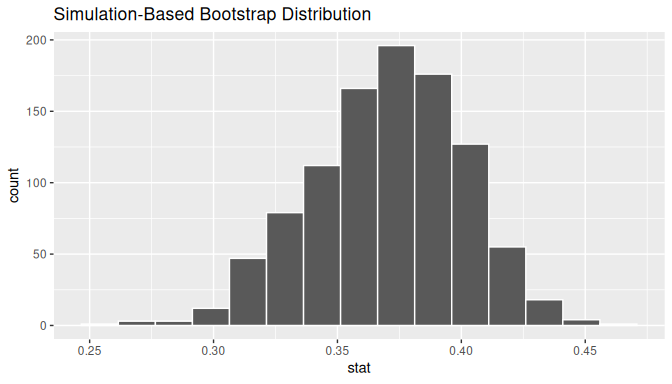

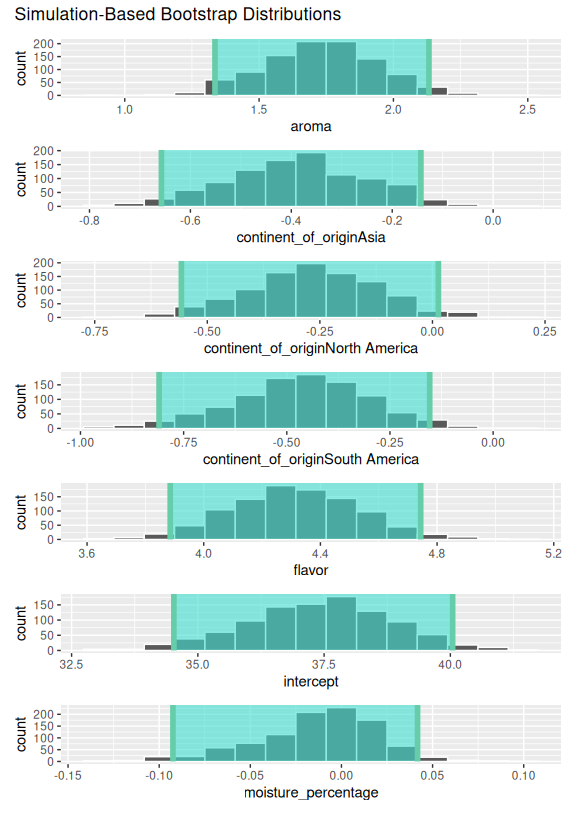

# ℹ 990 more rowsObserve how we have 1000 values of the bootstrapped slope \(b_1\) in the stat column. Let’s visualize the 1000 bootstrapped values in Figure 10.13.

visualize(bootstrap_distn_slope)

FIGURE 10.13: Bootstrap distribution of slope.

Observe how the bootstrap distribution is roughly bell-shaped. Recall from Subsection 8.2.1 that the shape of the bootstrap distribution of \(b_1\) closely approximates the shape of the sampling distribution of \(b_1\).

Percentile-method

First, let’s compute the 95% confidence interval for \(\beta_1\) using the percentile method. We’ll do so by identifying the 2.5th and 97.5th percentiles which include the middle 95% of values. Recall that this method does not require the bootstrap distribution to be normally shaped.

percentile_ci <- bootstrap_distn_slope |>

get_confidence_interval(type = "percentile", level = 0.95)

percentile_ci# A tibble: 1 × 2

lower_ci upper_ci

<dbl> <dbl>

1 0.309088 0.425198The resulting percentile-based 95% confidence interval for \(\beta_1\) of (0.309, 0.425).

Standard error method

Since the bootstrap distribution in Figure 10.13 appears to be roughly bell-shaped, we can also construct a 95% confidence interval for \(\beta_1\) using the standard error method.

In order to do this, we need to first compute the fitted slope \(b_1\), which will act as the center of our standard error-based confidence interval. While we saw in the regression table in Table 10.6 that this was \(b_1\) = 0.371, we can also use the infer pipeline with the generate() step removed to calculate it:

observed_slope <- old_faithful_2024 |>

specify(waiting ~ duration) |>

calculate(stat = "slope")

observed_slopeResponse: waiting (numeric)

Explanatory: duration (numeric)

# A tibble: 1 × 1

stat

<dbl>

1 0.371095We then use the get_ci() function with level = 0.95 to compute the 95% confidence interval for \(\beta_1\). Note that setting the point_estimate argument to the observed_slope of 0.371 sets the center of the confidence interval.

se_ci <- bootstrap_distn_slope |>

get_ci(level = 0.95, type = "se", point_estimate = observed_slope)

se_ci# A tibble: 1 × 2

lower_ci upper_ci

<dbl> <dbl>

1 0.311278 0.430912The resulting standard error-based 95% confidence interval for \(\beta_1\) of \((0.311, 0.431)\) is slightly different than the percentile-based confidence interval. Note that neither of these confidence intervals contains 0 and is entirely located above 0. This is suggesting that there is in fact a meaningful positive relationship between waiting times and duration for Old Faithful eruptions.

10.3.2 Hypothesis test for population slope using infer

Let’s now conduct a hypothesis test of \(H_0: \beta_1 = 0\) vs. \(H_A: \beta_1 \neq 0\). We will use the infer package, which follows the hypothesis testing paradigm in the “There is only one test” diagram in Figure 9.11.

Let’s first think about what it means for \(\beta_1\) to be zero as assumed in the null hypothesis \(H_0\). Recall we said if \(\beta_1 = 0\), then this is saying there is no relationship between the waiting time and duration. Thus, assuming this particular null hypothesis \(H_0\) means that in our “hypothesized universe” there is no relationship between waiting and duration. We can therefore shuffle/permute the waiting variable to no consequence.

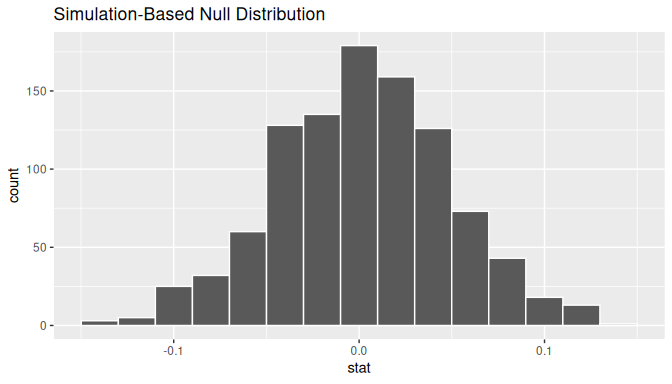

We construct the null distribution of the fitted slope \(b_1\) by performing the steps that follow. Recall from Section 9.3 on terminology, notation, and definitions related to hypothesis testing where we defined the null distribution: the sampling distribution of our test statistic \(b_1\) assuming the null hypothesis \(H_0\) is true.

-

specify()the variables of interest inold_faithful_2024with the formula:waiting ~ duration. -

hypothesize()the null hypothesis ofindependence. Recall from Section 9.4 that this is an additional step that needs to be added for hypothesis testing. -

generate()replicates by permuting/shuffling values from the original sample of 114 eruptions. We generatereps = 1000replicates usingtype = "permute"here. -

calculate()the test statistic of interest: the fittedslope\(b_1\).

In this case, we permute the values of waiting across the values of duration 1000 times. We can do this shuffling/permuting since we assumed a “hypothesized universe” of no relationship between these two variables. Then we calculate the "slope" coefficient for each of these 1000 generated samples.

null_distn_slope <- old_faithful_2024 |>

specify(waiting ~ duration) |>

hypothesize(null = "independence") |>

generate(reps = 1000, type = "permute") |>

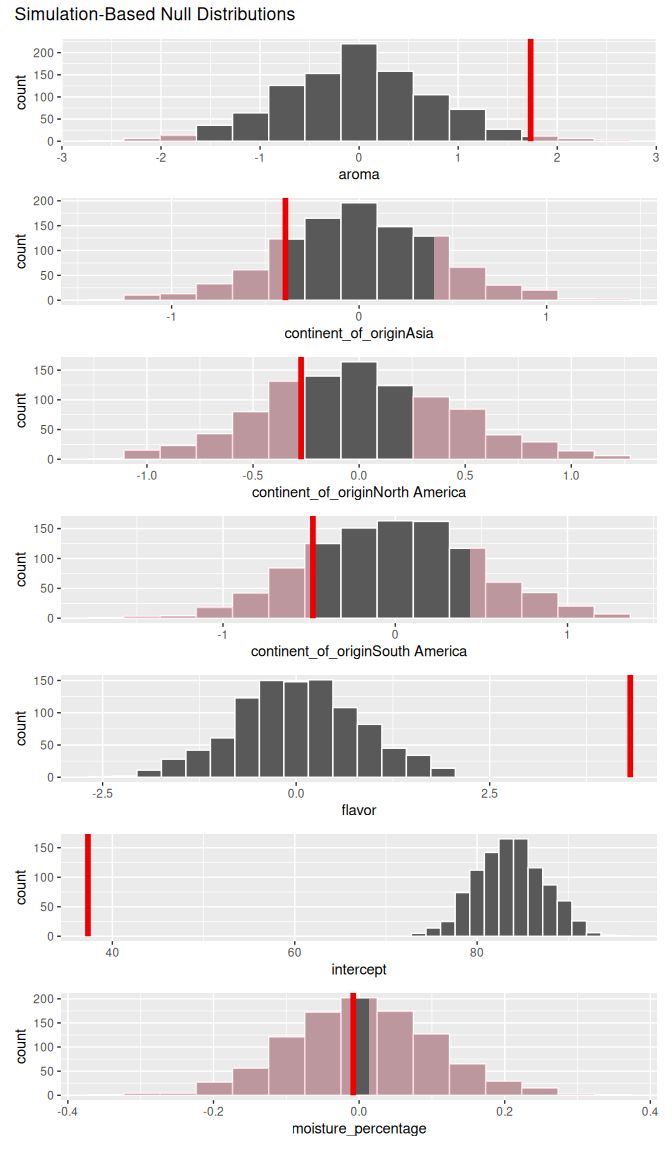

calculate(stat = "slope")Observe the resulting null distribution for the fitted slope \(b_1\) in Figure 10.14.

FIGURE 10.14: Null distribution of slopes.

Notice how it is centered at \(b_1\) = 0. This is because in our hypothesized universe, there is no relationship between waiting and duration and so \(\beta_1 = 0\). Thus, the most typical fitted slope \(b_1\) we observe across our simulations is 0. Observe, furthermore, how there is variation around this central value of 0.

# Observed slope

b1 <- old_faithful_2024 |>

specify(waiting ~ duration) |>

calculate(stat = "slope")

b1Response: waiting (numeric)

Explanatory: duration (numeric)

# A tibble: 1 × 1

stat

<dbl>

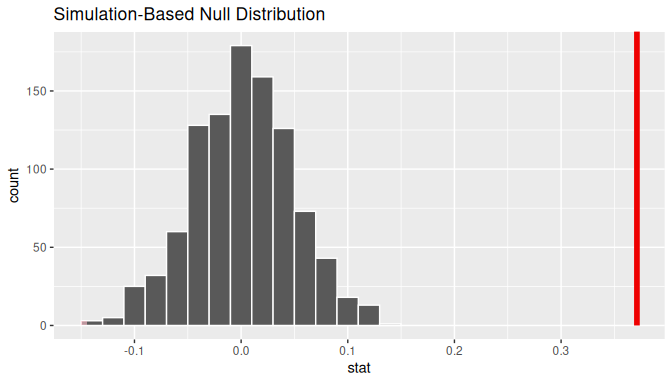

1 0.371095Let’s visualize the \(p\)-value in the null distribution by comparing it to the observed test statistic of \(b_1\) = 0.371 in Figure 10.15. We’ll do this by adding a shade_p_value() layer to the previous visualize() code.

FIGURE 10.15: Null distribution and \(p\)-value.

Since the observed fitted slope 0.371 falls far to the right of this null distribution and thus the shaded region doesn’t overlap it, we’ll have a \(p\)-value of 0. For completeness, however, let’s compute the numerical value of the \(p\)-value anyways using the get_p_value() function. Recall that it takes the same inputs as the shade_p_value() function:

null_distn_slope |>

get_p_value(obs_stat = b1, direction = "both")# A tibble: 1 × 1

p_value

<dbl>

1 0This matches the \(p\)-value of 0 in the regression table. We therefore reject the null hypothesis \(H_0: \beta_1 = 0\) in favor of the alternative hypothesis \(H_A: \beta_1 \neq 0\). We thus have evidence that suggests there is a significant relationship between waiting time and duration values for eruptions of Old Faithful.

When the conditions for inference for regression are met and the null distribution has a bell shape, we are likely to see similar results between the simulation-based results we just demonstrated and the theory-based results shown in the regression table.

Learning check

(LC10.11) Repeat the inference but this time for the correlation coefficient instead of the slope. Note the implementation of stat = "correlation" in the calculate() function of the infer package.

(LC10.12) Why is it appropriate to use the bootstrap percentile method to construct a 95% confidence interval for the population slope \(\beta_1\) in the Old Faithful data?

- A. Because it assumes the slope follows a perfect normal distribution.

- B. Because it relies on resampling the residuals instead of the original data points.

- C. Because it requires the original data to be uniformly distributed.

- D. Because it does not require the bootstrap distribution to be normally shaped.

(LC10.13) What is the role of the permutation test in the hypothesis testing for the population slope \(\beta_1\)?

- A. It generates new samples to confirm the confidence interval boundaries.

- B. It assesses whether the observed slope could have occurred by chance under the null hypothesis of no relationship.

- C. It adjusts the sample size to reduce sampling variability.

- D. It ensures the residuals of the regression model are normally distributed.

(LC10.14) After generating a null distribution for the slope using infer, you find the \(p\)-value to be near 0. What does this indicate about the relationship between waiting and duration in the Old Faithful data?

- A. There is no evidence of a relationship between

waitingandduration. - B. The observed slope is likely due to random variation under the null hypothesis.

- C. The observed slope is significantly different from zero, suggesting a meaningful relationship between

waitingandduration. - D. The null hypothesis cannot be rejected because the \(p\)-value is too small.

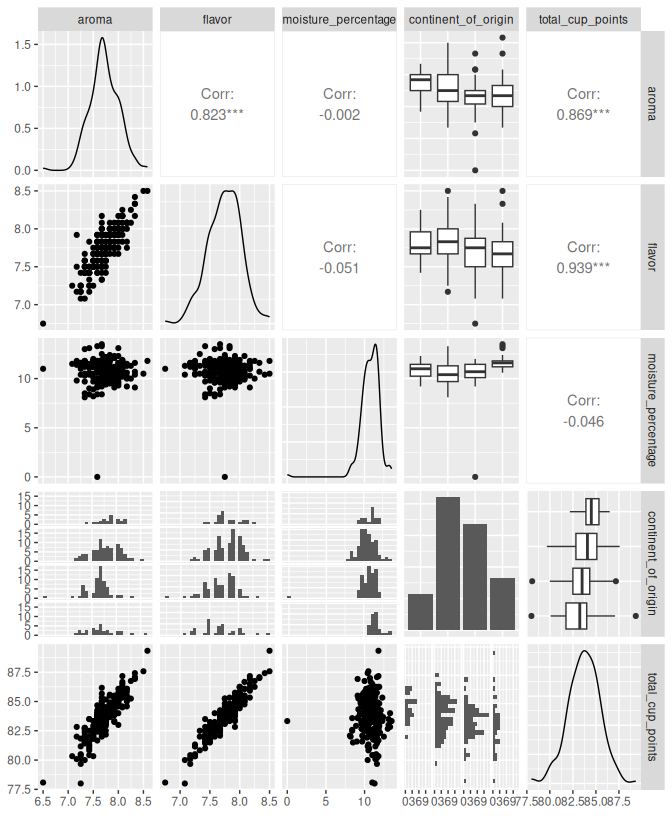

10.4 The multiple linear regression model

10.4.1 The model

The extension from a simple to a multiple regression model is discussed next. We assume that a population has a response variable (\(Y\)) and two or more explanatory variables (\(X_1, X_2, \dots, X_p\)) with \(p \ge 2\). The statistical linear relationship between these variables is given by

\[Y = \beta_0 + \beta_1 \cdot X_1 + \dots + \beta_p X_p + \epsilon\] where \(\beta_0\) is the population intercept and \(\beta_j\) is the population partial slope related to regressor \(X_j\). The error term \(\epsilon\) accounts for the portion of \(Y\) that is not explained by the line. As in the simple case, we assume that the expected value is \(E(\epsilon) = 0\), the standard deviation is \(SD(\epsilon) = \sigma\), and the variance is \(Var(\epsilon) = \sigma^2\). The variance and standard deviation are constant regardless of the value of \(X_1, X_2, \dots, X_p\). If you were to take a large number of observations from this population, we expect the error terms sometimes to be greater than zero and other times less than zero, but on average equal to zero, give or take \(\sigma\) units away from zero.